So, you have an AI/ML use case and want to know how to build it out in the Cloud?


Introduction

It’s the start of a new year, which means new projects and new initiatives to help your business gain a competitive advantage! You just had your yearly kick-off meeting with your team, and your boss told everyone that the team will be working on an exciting new natural language processing (NLP) pipeline to conduct sentiment analysis on customer conversations in your contact center. Finally! A real ML project you can get hands-on with. Your sprint task for the week is to evaluate which tools, technologies and frameworks would be best suited for starting such a project, and so you start doing your research...

I am sure the scenario I have described above sounds familiar to a lot of us. It is a very common scenario when working in AI/ML (or any technology, for that matter): your business and team have a new project they are looking to start, and you are tasked with understanding the current landscape of tools and technologies to help the team decide where to begin.

In this blog post, I would like to offer a strategy for thinking about this specific problem as it relates to AI/ML tools, with the goal of helping you and your team make an informed decision the next time you find yourselves in this scenario.

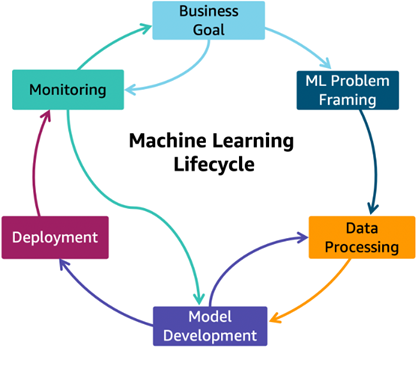

At a high level, you can break down the machine learning project lifecycle into the following phases:

- Business Goal Definition

- Machine Learning Problem Framing

- Data Collection and Processing

- Model Development: Model Selection & Training

- Model Deployment (Inference)

- Monitoring

Figure 1. Adapted from: AWS Well-Architected Machine Learning Lifecycle

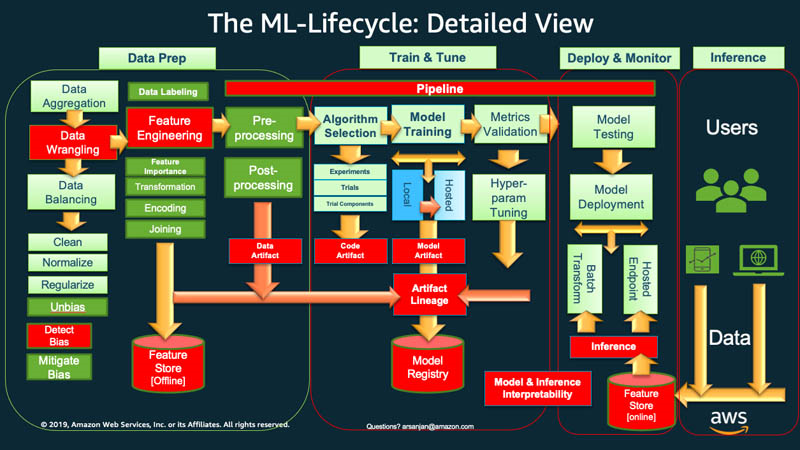

Figure 2. Detailed view of the technical phases of the ML Lifecycle by Ali Arsanjani

Each of the technical phases (3-6) can be broken down into multiple subtasks, as shown in Figure 2. Software and tools have been created to support ML researchers, ML engineers and data scientists in each of these tasks. Oftentimes, the question becomes: which tools are best for your team, given its current level of ML maturity?

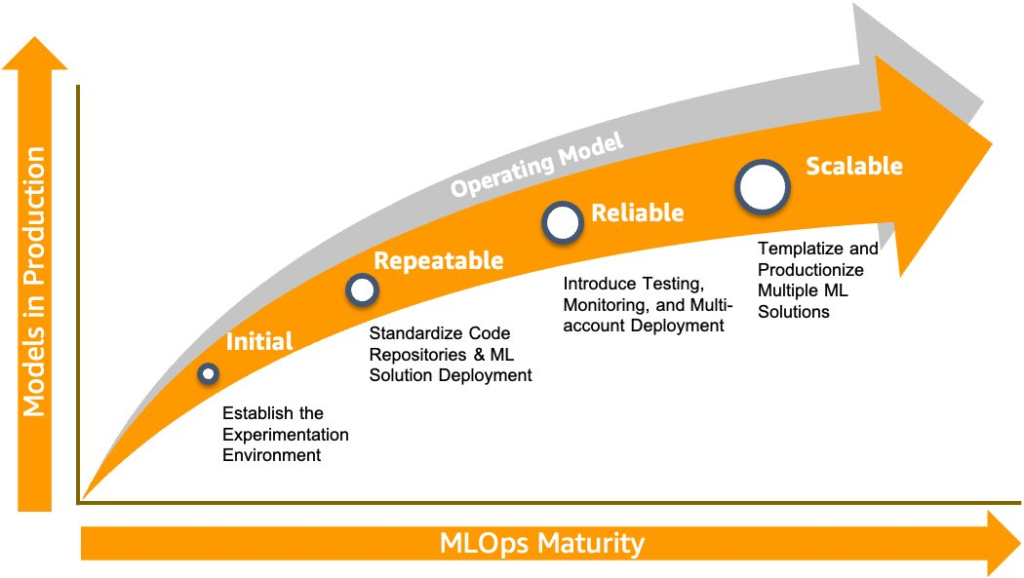

Figure 3. Adapted from AWS ML Maturity Model

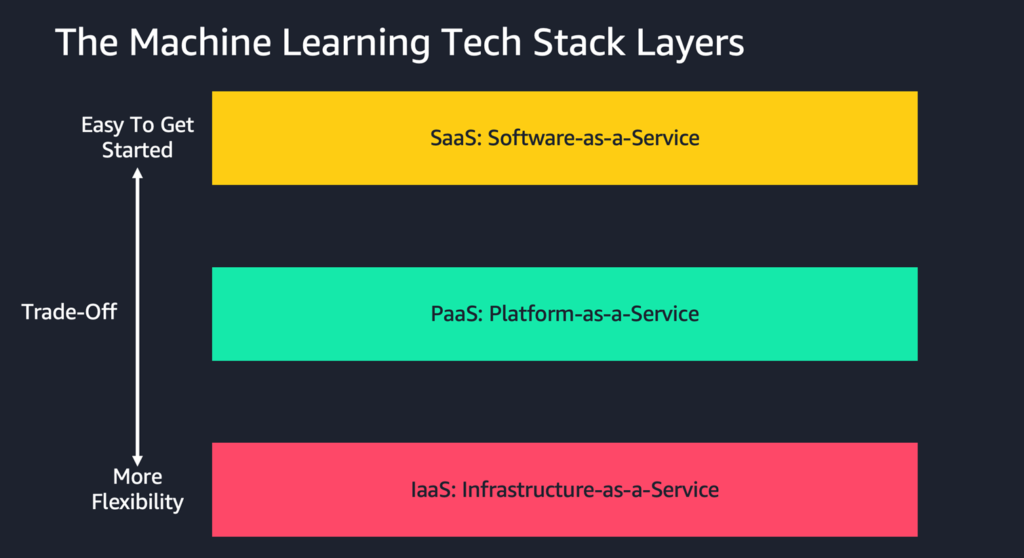

Regardless of which vendor you look at, you will find that most AI/ML tools fall into three layers: Infrastructure-as-a-Service, Platform-as-a-Service and Software-as-a-Service (or what I will call AI/ML-as-a-Service). These layers are shown in Figure 4, where the y-axis represents both the level of effort your team will have to invest to get started and the level of flexibility and customization you will have at each layer. As you move down the y-axis, your team will need to invest more effort to get started, but in return it will gain more flexibility. This trade-off between initial effort and flexibility is a recurring theme as we discuss the advantages and disadvantages of operating at each of these layers.

Figure 4. High level view of the trade-offs between the machine learning tech stack layers
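One rough way to make this trade-off concrete is to list what your team owns at each layer, following the descriptions in the sections below: with AI services you bring only your data, with a platform you also bring the model, and at the infrastructure layer you configure the infrastructure as well. The Python sketch below is my own illustrative model rather than anything taken from the figure; `StackLayer`, `LAYERS` and the "effort proxy" (a simple count of owned items) are hypothetical names chosen for illustration.

```python
from dataclasses import dataclass
from typing import FrozenSet, List


@dataclass(frozen=True)
class StackLayer:
    name: str
    team_owns: FrozenSet[str]  # what your team builds, configures and maintains

    @property
    def effort_proxy(self) -> int:
        # Crude proxy: the more your team owns, the more effort to get started.
        return len(self.team_owns)


# Ordered from the top of the stack to the bottom (illustrative, not official).
LAYERS: List[StackLayer] = [
    StackLayer("AI services (SaaS)", frozenset({"data"})),
    StackLayer("Platform (PaaS)", frozenset({"data", "model"})),
    StackLayer("Infrastructure (IaaS)", frozenset({"data", "model", "infrastructure"})),
]

for upper, lower in zip(LAYERS, LAYERS[1:]):
    # Everything you own is also something you can customize, so each lower
    # layer owns a strict superset of the layer above: more effort, more flexibility.
    assert upper.team_owns < lower.team_owns
    print(f"{upper.name} -> {lower.name}: +{sorted(lower.team_owns - upper.team_owns)}")
```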

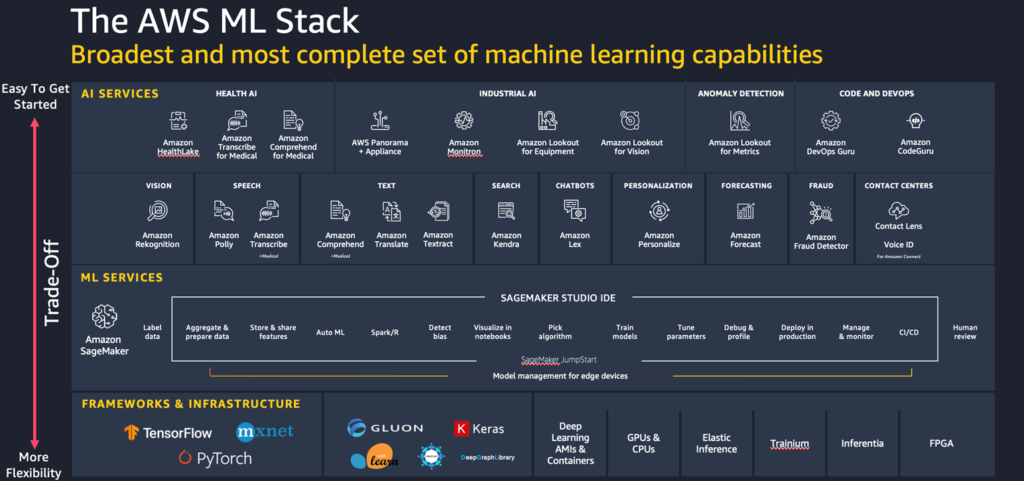

To make this more concrete, let’s review the AI/ML services available on Amazon Web Services (AWS) in the AWS ML Stack chart (Figure 5). You will see that this chart has the same three layers that I described above.

Figure 5. The AWS Machine Learning Stack

AI services layer

The first layer is the AI services layer (the same as the SaaS layer described above). This is where AWS makes state-of-the-art (SOTA) machine learning models for the most common use cases (such as image recognition, text extraction and speech-to-text) available to you through application programming interfaces (APIs). This means you can get started with your AI/ML project quickly, without building, training and hosting your own AI/ML models: all you have to do is bring your data and interact with the service through its APIs. As mentioned in Andrew Ng’s AI Transformation Playbook, this provides a great starting point for organizations that do not currently have AI/ML teams (or have smaller 2-3 person teams) to evaluate initial AI/ML projects and demonstrate to the business the value of investing in AI/ML. For many organizations, the return on investment does not justify building their own SOTA models for these common use cases, especially when such models are readily available from most cloud vendors.

However, in some niche scenarios you may find that your use case falls outside the capabilities these AI services currently provide. For example, you may be looking for an NLP model for entity extraction that requires specific entities (e.g. nouns such as police officers, nurses etc.) that are not currently available. Most AI services let you add functionality by bringing your own data; Amazon Comprehend, for example, lets you train custom entities on your own data. This approach is definitely worth exploring, but if you find that it still does not meet your requirements, that is when you would move down to the next layer: Platform-as-a-Service.
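For the contact center scenario from the introduction, calling Amazon Comprehend's sentiment API might look like the minimal Python sketch below. It assumes boto3 is installed and that AWS credentials and a default region are configured; `contact_center_sentiment` is a hypothetical helper name, and the optional `client` parameter exists only so the function can be exercised with a stand-in object instead of a live AWS client.

```python
from typing import Any, List, Optional


def contact_center_sentiment(transcripts: List[str], client: Optional[Any] = None) -> List[str]:
    """Return the Amazon Comprehend sentiment label for each transcript (hypothetical helper)."""
    if client is None:
        # Requires boto3 plus AWS credentials and a default region.
        import boto3
        client = boto3.client("comprehend")
    labels = []
    for text in transcripts:
        # DetectSentiment returns an overall label plus per-class confidence scores.
        response = client.detect_sentiment(Text=text, LanguageCode="en")
        labels.append(response["Sentiment"])  # POSITIVE, NEGATIVE, NEUTRAL or MIXED
    return labels
```

Comprehend applies size limits to each request, so long call transcripts may need to be split first; for larger volumes, the batch (`batch_detect_sentiment`) and asynchronous job APIs described in the Comprehend documentation are worth a look.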

Platform-as-a-service (PaaS) layer

The goal of the Platform-as-a-Service (PaaS) layer is to provide an abstraction that removes the need for customers to configure and orchestrate the underlying infrastructure required in the ML lifecycle (e.g. compute, storage, networking). If you consider each of the technical steps in the ML lifecycle, each one has its own infrastructure requirements. For example, you may require a cluster of compute instances for all the steps involved in data cleaning, another cluster of compute instances with specific GPU requirements for model training, and yet another cluster for serving models for inference. As you can imagine, this requires a great deal of effort to set up and maintain, and removing that effort is exactly the value proposition of a PaaS offering.

On the AWS Cloud, the PaaS layer for ML is made up of the Amazon SageMaker family of services. Amazon SageMaker gives ML teams the capabilities to perform all of the technical steps in the ML workflow (as depicted in the lifecycle charts above) while abstracting away the need to interface directly with the underlying compute, storage and networking infrastructure. This is done through the SageMaker SDK, all from within a common notebook interface (e.g. Jupyter, R etc.) that can be shared across teams. Because Amazon SageMaker abstracts away infrastructure configuration and orchestration, your AI/ML teams can focus specifically on ML tasks such as data processing, model selection and training, and deployment. Operating in the PaaS layer can be especially useful for smaller, less mature ML teams that need custom models falling outside what is offered in the AI services layer. ML platforms like SageMaker give you the extra flexibility to build or bring your own models, while also providing the scaffolding that abstracts away the need to configure and orchestrate the underlying infrastructure.
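As a sketch of what this looks like with the SageMaker Python SDK (v2), the snippet below trains a scikit-learn model and deploys it to an endpoint; the instance types are the only infrastructure decisions you make. The role ARN, the `train.py` script, the S3 URI, the instance types and the framework version are all placeholders I have assumed, so check the SageMaker documentation for what is supported in your account and region. `sklearn_training_config` and `train_and_deploy` are hypothetical helper names, and the SDK is imported inside `train_and_deploy` so the configuration helper can be inspected without the SDK installed.

```python
from typing import Any, Dict


def sklearn_training_config(role_arn: str, entry_point: str = "train.py") -> Dict[str, Any]:
    """Keyword arguments for a SageMaker scikit-learn estimator (placeholder values)."""
    return {
        "entry_point": entry_point,       # your training script (hypothetical)
        "role": role_arn,                 # IAM role SageMaker assumes on your behalf
        "instance_type": "ml.m5.xlarge",  # assumed CPU instance type for training
        "instance_count": 1,
        "framework_version": "1.2-1",     # assumed; check the supported versions
        "py_version": "py3",
    }


def train_and_deploy(config: Dict[str, Any], train_s3_uri: str):
    # Imported lazily so the config helper above works without the SDK installed.
    from sagemaker.sklearn.estimator import SKLearn

    estimator = SKLearn(**config)
    # SageMaker provisions the training instance, runs the job, then tears it down.
    estimator.fit({"train": train_s3_uri})
    # SageMaker provisions and manages the inference endpoint.
    return estimator.deploy(initial_instance_count=1, instance_type="ml.m5.large")
```

Unlike the training instance, the endpoint returned by `train_and_deploy` keeps running (and billing) until you delete it, for example with `predictor.delete_endpoint()`.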

Infrastructure-as-a-service (IaaS) layer

In the last layer, infrastructure-as-a-service (IaaS), you can configure the underlying infrastructure to meet your specific requirements. On the AWS Cloud, this is where you have access to Deep Learning AMIs or containers that come prepackaged with the most common ML frameworks (e.g. PyTorch, TensorFlow, MXNet etc.) and ML tools (scikit-learn, pandas, NumPy etc.), as well as a variety of instance types (e.g. GPU-based or CPU-based instances) to choose from based on your specific model requirements. The IaaS layer provides the greatest flexibility, since you can pick exactly the infrastructure configuration you would like to use, but in turn it also requires the highest level of effort to get started. Many of the most advanced AI/ML companies we think of, such as Google, Facebook, Baidu and Netflix, are required to operate in this layer because their use cases are specific and cutting-edge. These organizations also have large engineering teams and have reached a level of ML maturity that justifies operating at this level.
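By contrast with the platform layer, at the IaaS layer you choose and launch the instance yourself. Below is a minimal boto3 sketch, assuming you have already looked up the Deep Learning AMI ID for your region and created an EC2 key pair. `pick_instance_type`, `launch_dl_instance` and the two instance types are illustrative choices of mine, and the optional `client` parameter lets you pass a stand-in object instead of a live EC2 client.

```python
from typing import Any, Optional


def pick_instance_type(needs_gpu: bool) -> str:
    # Illustrative choices only: a GPU instance for deep learning, a CPU one otherwise.
    return "g4dn.xlarge" if needs_gpu else "m5.xlarge"


def launch_dl_instance(ami_id: str, needs_gpu: bool, key_name: str,
                       client: Optional[Any] = None) -> str:
    """Launch one EC2 instance from a Deep Learning AMI and return its ID (hypothetical helper)."""
    if client is None:
        # Requires boto3 plus AWS credentials and a default region.
        import boto3
        client = boto3.client("ec2")
    response = client.run_instances(
        ImageId=ami_id,  # a Deep Learning AMI ID for your region (you look this up)
        InstanceType=pick_instance_type(needs_gpu),
        KeyName=key_name,
        MinCount=1,
        MaxCount=1,
    )
    return response["Instances"][0]["InstanceId"]
```

Launching the instance is only the start: patching, scaling, monitoring and shutting it down are now your team's responsibility, which is the effort side of the trade-off described above.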

Conclusion

I hope this post shows that the ML tools you choose for a given ML project will depend on your use case, your organization’s ML maturity and the size of your ML team. For most organizations that are newer to their ML journey and have smaller ML teams, I would encourage you to get started in the AI services layer and begin moving down the ML stack as your ML maturity improves and your use cases require it. For companies with intermediate ML maturity, I still believe it makes sense to leverage AI services for SOTA models in the most common ML use cases, such as speech recognition and image recognition, that do not add business differentiation or a competitive advantage. For use cases that are specific to your business and would add value, I would encourage you to leverage a platform such as SageMaker to help your teams get started quickly and make the most of your resources.
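To summarize the rule of thumb, here is a hypothetical Python helper that encodes my reading of the recommendations in this post. It is a heuristic, not a policy: the `Maturity` levels are a simplification, and mapping advanced teams with differentiating use cases to IaaS is an assumption drawn from the IaaS section rather than a recommendation stated in the conclusion.

```python
from enum import Enum


class Maturity(Enum):
    NEW = "new"
    INTERMEDIATE = "intermediate"
    ADVANCED = "advanced"


def recommended_layer(maturity: Maturity, covered_by_ai_service: bool,
                      differentiating: bool) -> str:
    """Suggest a starting layer of the ML stack (heuristic sketch)."""
    if covered_by_ai_service and not differentiating:
        # Common use cases (speech or image recognition etc.): use the AI services.
        return "AI services"
    if maturity is Maturity.NEW:
        # Start at the top of the stack; move down only when the use case requires it.
        return "AI services" if covered_by_ai_service else "PaaS"
    if maturity is Maturity.INTERMEDIATE:
        # Business-specific use cases: a platform such as SageMaker.
        return "PaaS"
    # Assumption: large, mature teams with cutting-edge use cases.
    return "IaaS"
```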